We made Unifier 20x faster

And possibly even faster now that we have Unifier v3.

For a long time, Unifier felt way too slow to us. It would take a second or two to bridge our messages to every server, and with attachments involved it got even worse. So when we kept optimizing our bot and gave its bridge extension a whole rewrite, the unexpected happened.

We never thought this would happen, but with recent upgrades and optimization experiments, we've somehow managed to make Unifier a whole 20x faster than Unifier v0.3.0. We're not joking around (even we couldn't believe it): these are real performance figures, tested in real-world environments.

How we measured it

To get a rough measure of the speed, we first measured how long (in seconds) it would take Unifier to bridge messages to Discord with Platform multisend and Threaded bridging v2 experiments enabled. Then, we divided the time we got by how many copies were created by bridging the message. And finally, we divided 1 by the time we got to see how many messages per second (MPS) Unifier could handle.
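The calculation described above can be sketched in a few lines of Python. This is only an illustration: the function name `messages_per_second` and the example numbers are made up for this sketch and are not taken from our test data.

```python
def messages_per_second(elapsed_seconds: float, copies: int) -> float:
    """Convert one timed bridge run into messages per second (MPS)."""
    if elapsed_seconds <= 0 or copies <= 0:
        raise ValueError("elapsed_seconds and copies must be positive")
    # Step 1: time spent on each bridged copy of the message.
    seconds_per_copy = elapsed_seconds / copies
    # Step 2: invert it to get how many copies are delivered per second.
    return 1 / seconds_per_copy


# Hypothetical run (not real test data): 10 copies bridged in 0.5 seconds.
example_mps = messages_per_second(0.5, 10)
print(f"{example_mps:.1f} MPS")
```

Dividing by the number of copies first is what makes runs with different numbers of connected servers comparable to each other.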

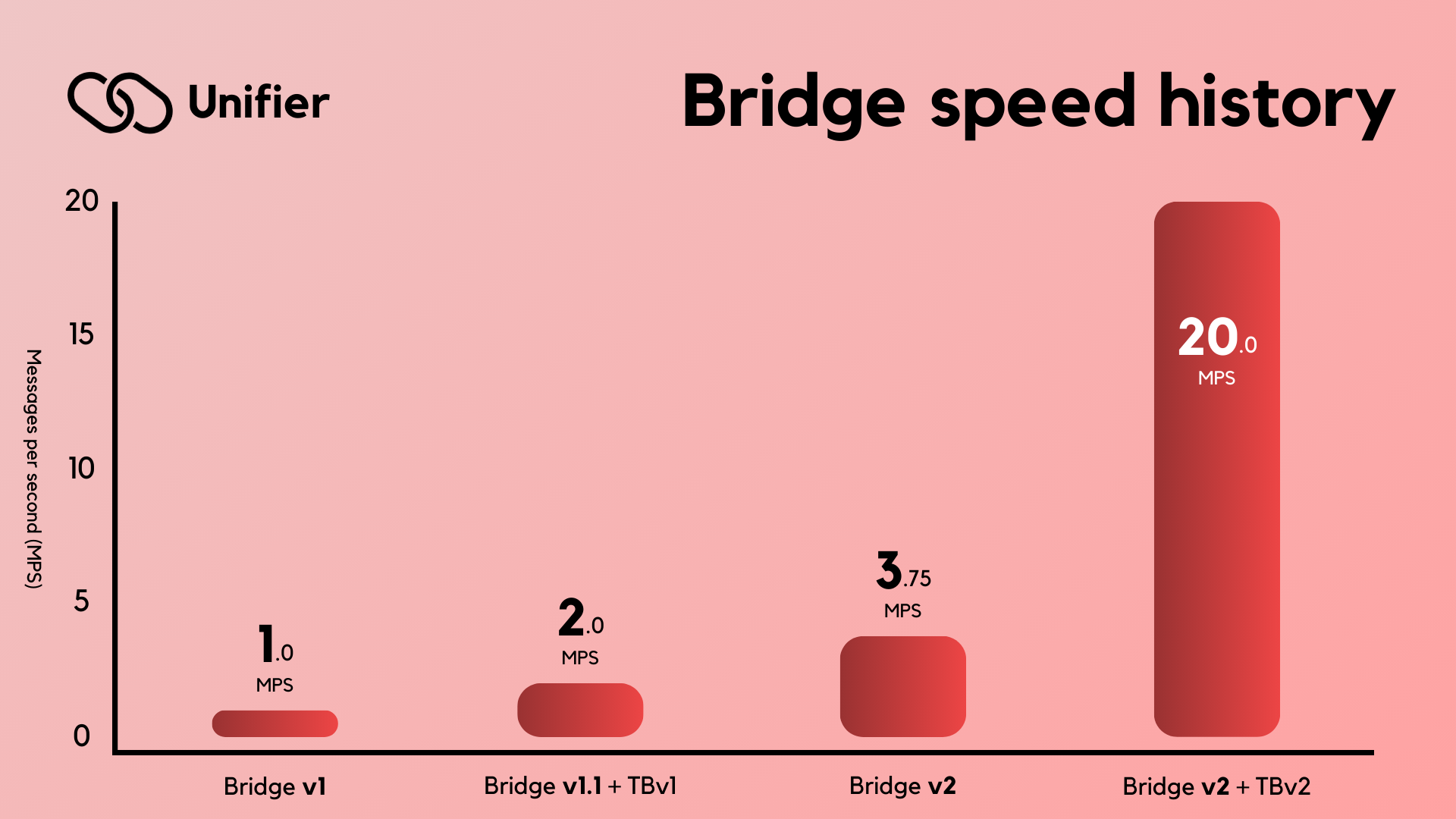

For v0.3.0 and older (Bridge v1), this was around 1.0MPS.

For v0.4.0 (Bridge v1.1 + TBv1), this was around 2.0MPS.

For v1.1.0 (Bridge v2), this was around 3.75MPS.

And lastly, for v1.1.1 (Bridge v2 + TBv2), this was a whopping 20.0MPS, peaking at 20.3MPS.

This means that over the course of a day, we've improved Unifier's peak bridging speed by about 5.4x (from 3.75MPS), over the course of a month by about 10x (from 2.0MPS), and over the course of Unifier's existence by 20.3x (from 1.0MPS). The graph shows the max speed consistently achieved by each version.
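For anyone who wants to check the math, the speedups can be recomputed from the per-version MPS figures listed above. The dictionary keys and the `speedup` helper are just labels chosen for this sketch.

```python
# MPS figures quoted in this post, keyed by a label picked for this sketch.
MPS = {
    "v0.3.0": 1.0,        # Bridge v1
    "v0.4.0": 2.0,        # Bridge v1.1 + TBv1
    "v1.1.0": 3.75,       # Bridge v2
    "v1.1.1 peak": 20.3,  # Bridge v2 + TBv2, peak figure
}


def speedup(new: str, old: str) -> float:
    """How many times faster `new` is compared to `old`."""
    return MPS[new] / MPS[old]


for old in ("v1.1.0", "v0.4.0", "v0.3.0"):
    print(f"v1.1.1 peak vs {old}: {speedup('v1.1.1 peak', old):.2f}x")
```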

So what speeds should I expect?

Almost always: 10MPS

Most of the time: 15MPS

Sometimes: 20MPS

On a very good day: 20.3MPS

In a nutshell: you will almost always get superfast bridging, powered by Bridge v2 and TBv2. It might even be the fastest speed ever for a bridge bot, but we gotta verify that sometime.

Testing limitations

We did not consider Revolt and Guilded messages, as our userbase is too small on these platforms to have a reliable sample size.

The results do not take into account the delay between Discord registering the message to its servers and the message being displayed to the client.

The results do not take into account potential lag spikes. We considered these as outliers, as we wanted to test the best performance Unifier could show on a consistent and frequent basis, rather than the average or absolute best performance.

The results do not take into account additional delay caused by sending media. Our testing data is based on messages sent without any attachments.

Why the count was updated

In a previous version of the article, we claimed that Bridge v2 with TBv2 had up to 12.5MPS bridging performance. However, after reviewing newer data, we have updated our count.

When we first tested the speed, we did not have as much data as we have right now, so we believed that 12.5MPS was the highest it could get. However, new data revealed that Unifier's peak bridging speed is in fact not 12.5MPS, but 20.3MPS, and that it can consistently reach 20MPS.

How we did it

There are a lot of things we did under the hood to get Unifier's speed to where it is today. Some were intentional, while others ended up contributing to Unifier's bridging speed by accident.

Threaded bridging

One thing we noticed is that sending messages via webhooks took quite some time compared to regular messages, so we had to wait quite a bit before sending another message. This further increased the time we needed in addition to the processing time.

So we thought: "what if we sent the messages all at once?"

Using threads to send messages was a whole new concept for us. I (Green) may have worked on Nevira and a few other Discord bots before, but never in my life as a Discord bot developer have I ever attempted to use threads to send a message. The only multiprocessing I've ever added to my bots was just some code to stop resource-heavy functions from holding up the entire bot.

When we pulled it off, it brought Unifier's delay down by quite a bit. But this still wasn't enough, which is where our next optimization comes into play.
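To illustrate the "send everything at once" idea, here is a minimal sketch using Python's standard thread pool. It is not Unifier's actual code: `send_via_webhook` is a hypothetical stand-in that just sleeps to simulate a slow webhook call, and `bridge_threaded` is a name made up for this example.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def send_via_webhook(destination: str, content: str) -> str:
    """Hypothetical stand-in for a slow webhook send (no real API call)."""
    time.sleep(0.05)  # simulate network latency
    return f"{destination}: {content}"


def bridge_threaded(destinations: list[str], content: str) -> list[str]:
    """Send one message to every destination at once instead of one by one."""
    if not destinations:
        return []
    with ThreadPoolExecutor(max_workers=len(destinations)) as pool:
        # pool.map() runs the sends in parallel and keeps the input order.
        return list(pool.map(lambda d: send_via_webhook(d, content), destinations))


if __name__ == "__main__":
    start = time.perf_counter()
    print(bridge_threaded(["server-a", "server-b", "server-c"], "hello"))
    print(f"took {time.perf_counter() - start:.2f}s")
```

With sequential sends the total time grows with every connected server, while here it stays close to the time of the slowest single send.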

Webhook caching

Fetching the webhooks from Discord's API was necessary for Unifier to function, because it uses webhooks to bridge messages between servers. But fetching this took quite a long time, similar to how long it took for Unifier to send a message to Discord through webhooks.

So we decided to cache these webhooks, so we wouldn't need to fetch these from the API every time we needed them. This would save us a few API calls, and speed things up a lot per message.

And thanks to Threaded bridging and Webhook caching, we made our first jump from 1MPS to 2MPS.
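The webhook caching idea boils down to "fetch once, reuse afterwards". The sketch below shows that pattern in isolation. The `WebhookCache` class and the `fetch` callable are hypothetical names for this example, and the cached value is a plain string standing in for a real webhook object.

```python
from typing import Callable, Dict


class WebhookCache:
    """Remember fetched webhooks so repeat lookups skip the API call."""

    def __init__(self, fetch: Callable[[int], str]) -> None:
        self._fetch = fetch                # slow lookup, e.g. an API request
        self._cache: Dict[int, str] = {}   # webhook ID -> cached webhook

    def get(self, webhook_id: int) -> str:
        # Only call the slow fetch on a cache miss.
        if webhook_id not in self._cache:
            self._cache[webhook_id] = self._fetch(webhook_id)
        return self._cache[webhook_id]

    def invalidate(self, webhook_id: int) -> None:
        # Drop a stale entry, e.g. after the webhook was deleted.
        self._cache.pop(webhook_id, None)


if __name__ == "__main__":
    calls = []

    def fake_fetch(webhook_id: int) -> str:
        calls.append(webhook_id)
        return f"webhook-{webhook_id}"

    cache = WebhookCache(fake_fetch)
    cache.get(1)
    cache.get(1)
    print(f"fetch calls: {len(calls)}")
```

Any cache like this needs a way to drop stale entries, which is why the sketch includes `invalidate`.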

Bridge rewrite

Originally, our bridging system was very poorly written. We gave it a small rewrite in v0.3.0 to build the foundation that Unifier really needed, but that was mainly for adding support for more rooms, managing moderators, etc. So between v0.1.0 and v0.3.5, you could say that the speed was pretty much identical. Speed only truly started to pick up when we introduced Threaded Bridging and some optimizations in v0.4.0.

After we introduced Revolt Support in v1.0.0, we noticed that the bridge at the time was way too poorly programmed. Adding Revolt Support took us a lot longer than adding Guilded Support later did, and the bridge was a key factor. There was no function for bridging messages: all the code was within an on_message event, so we had to spend even more time writing the Revolt-to-External bridge in the Revolt Support extension. So before we added Guilded Support, we thought we might as well give the bridge a clean start. And with that came quite the performance boost.

Bridge v2 was built to be more stable, reliable, and flexible, so we had to deal with fewer "Replying to [unknown]"s and less hassle adding more platforms under Unifier's belt. We also got rid of lots of redundant code, so we could optimize the bot even further. If Bridge v1.1 is a high speed line between Discord and Revolt, then you could say Bridge v2 is a higher speed line between Discord and Revolt, with upgradability so other platforms can connect to the line without building separate lines to the existing platforms.

And thanks to this, we jumped from 2MPS to 3.75MPS. And with Threaded Bridging v2, we further jumped to 20.3MPS.

More improvements we've made after we wrote this

We've added a lot more optimizations to Unifier since we wrote this blog post.

Added uvloop

We're now using uvloop on Optimized and Balanced installations of Unifier to speed up asyncio operations throughout the bot. On Windows, Unifier will use winloop.
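Picking the event loop per platform can be sketched roughly like this. This is an assumption about how such a switch might look, not Unifier's actual startup code: `pick_loop_factory` and `run` are hypothetical helpers, and the sketch falls back to the default asyncio loop when neither package is installed.

```python
import asyncio
import sys


def pick_loop_factory():
    """Return a function that creates the fastest event loop available."""
    try:
        if sys.platform == "win32":
            import winloop as fast_loop  # Windows counterpart of uvloop
        else:
            import uvloop as fast_loop
    except ImportError:
        # Neither package is installed: use the standard asyncio loop.
        return asyncio.new_event_loop
    return fast_loop.new_event_loop


def run(coro):
    """Run a coroutine to completion on the chosen event loop."""
    loop = pick_loop_factory()()
    try:
        return loop.run_until_complete(coro)
    finally:
        loop.close()


async def ping() -> str:
    await asyncio.sleep(0)
    return "pong"


if __name__ == "__main__":
    print(run(ping()))
```

The rest of the bot does not need to change, because uvloop and winloop are drop-in replacements for the asyncio event loop.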

Multi-core bridging

Due to compatibility issues, this is not available on Windows systems.

Instead of just using asyncio and uvloop/winloop, we've also added aiomultiprocess to take advantage of multiple cores on the host system. So instead of running things concurrently on one core, we've decided to spread the jobs across all cores to enhance bridging performance even further.

This won't really make much of a difference for small Rooms, but it will if you have a lot of servers connected to the same Room.

Platform multisend

Instead of just making things buttery smooth on Discord, we've made it so for other platforms as well through multisend. Instead of waiting for bridging to one platform to finish before starting the next, we bridge to all platforms at the same time to improve bridging times. Now messages on Revolt and Guilded won't take so long to arrive because Discord kept holding up the queue.
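The multisend idea can be sketched with `asyncio.gather`, which starts all platform sends together and waits for every one of them. The `bridge_to_platform` coroutine and its delays are hypothetical placeholders for the real per-platform bridging code.

```python
import asyncio


async def bridge_to_platform(platform: str, content: str, delay: float) -> str:
    """Hypothetical stand-in for bridging a message to one platform."""
    await asyncio.sleep(delay)  # simulate that platform's send time
    return f"{platform}: {content}"


async def multisend(content: str) -> list[str]:
    """Bridge to all platforms at the same time instead of one after another."""
    # Made-up delays: Discord is the slow one in this sketch.
    delays = {"discord": 0.05, "revolt": 0.02, "guilded": 0.02}
    # gather() runs the coroutines concurrently and keeps the input order.
    return await asyncio.gather(
        *(bridge_to_platform(p, content, d) for p, d in delays.items())
    )


if __name__ == "__main__":
    print(asyncio.run(multisend("hello")))
```

In this sketch the total time is set by the slowest platform, not by the sum of all three.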

How do I get these speed boosts?

All performance optimizations should be already online on your servers today! Some were previously experiments, but now they've matured and they are available to all. Enjoy hyperfast bridging speeds!